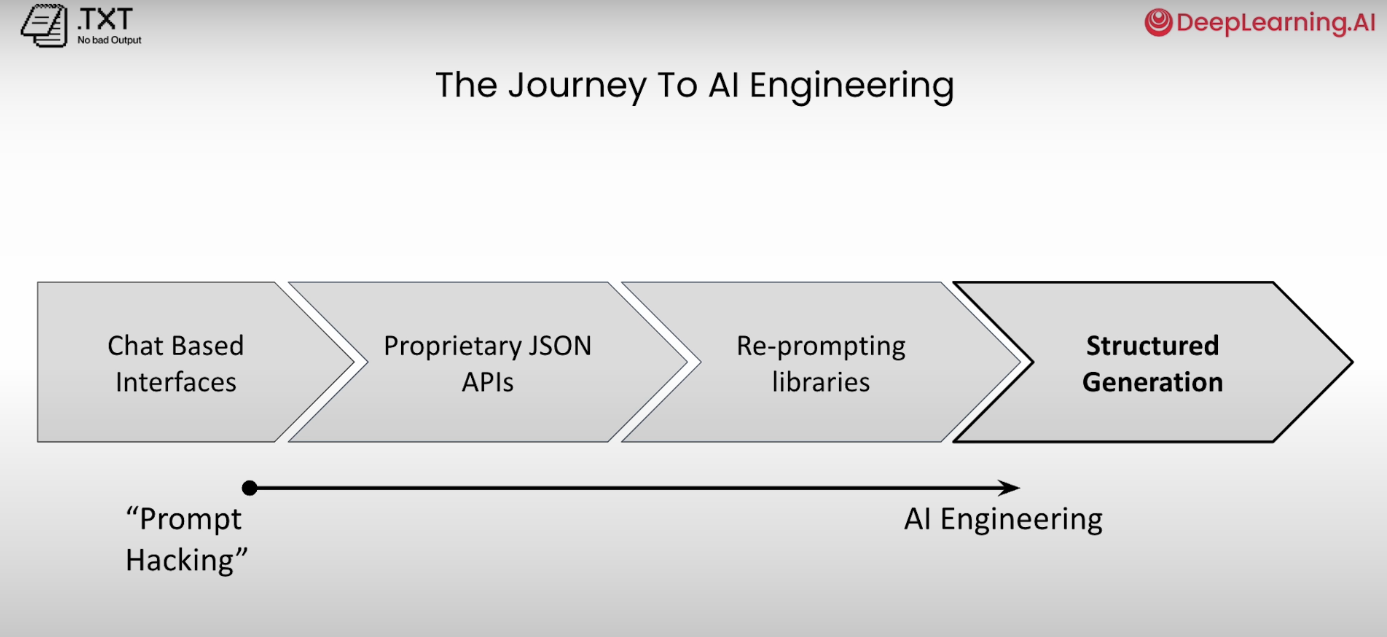

Overview

- Chat-based interfaces are not scalable.

- Using JSON outputs lets us call APIs as needed, but the approach is tightly coupled to the service provider's libraries.

- Re-prompting is more flexible and lets us try other models. This enables building reusable software libraries that work with different LLM providers.

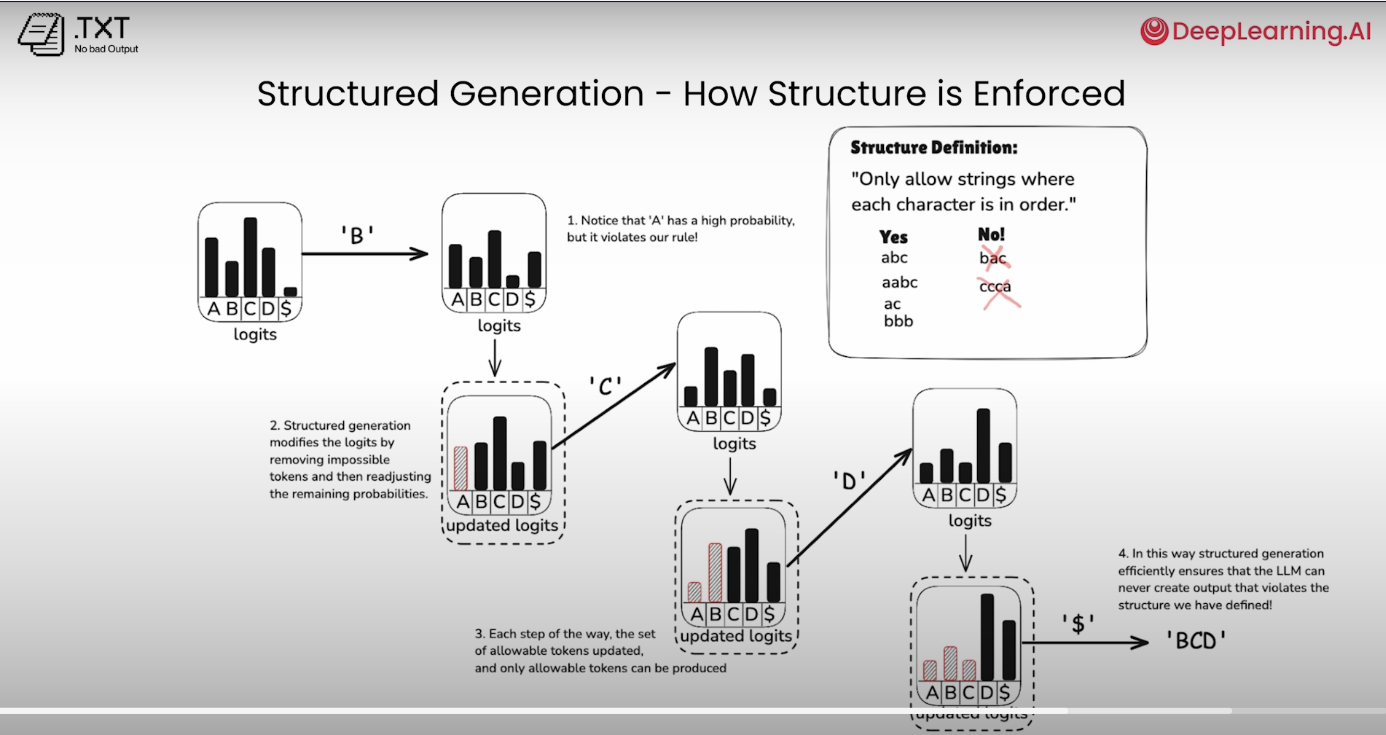

- With all of the above, there is still a big gap between the code and the model itself. With structured generation you can modify the model's logits directly and make it produce exactly the output you want.

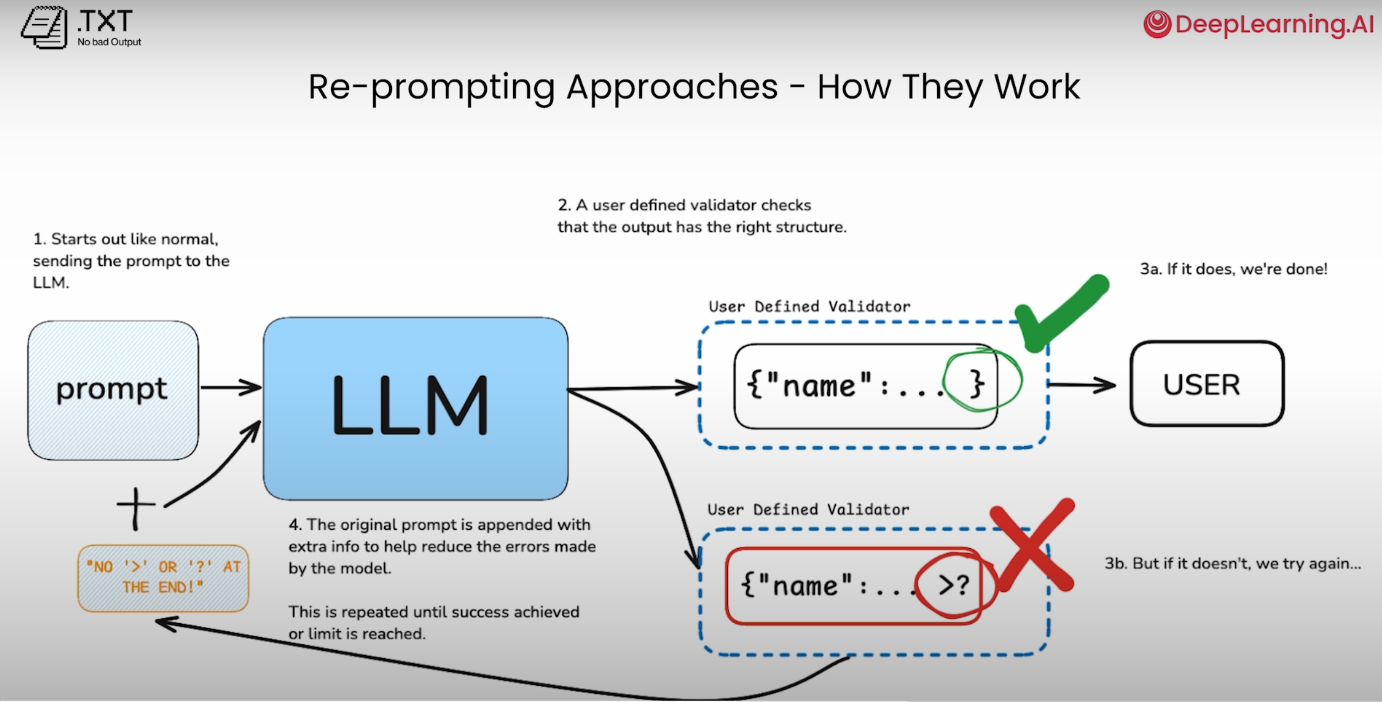

Reprompting

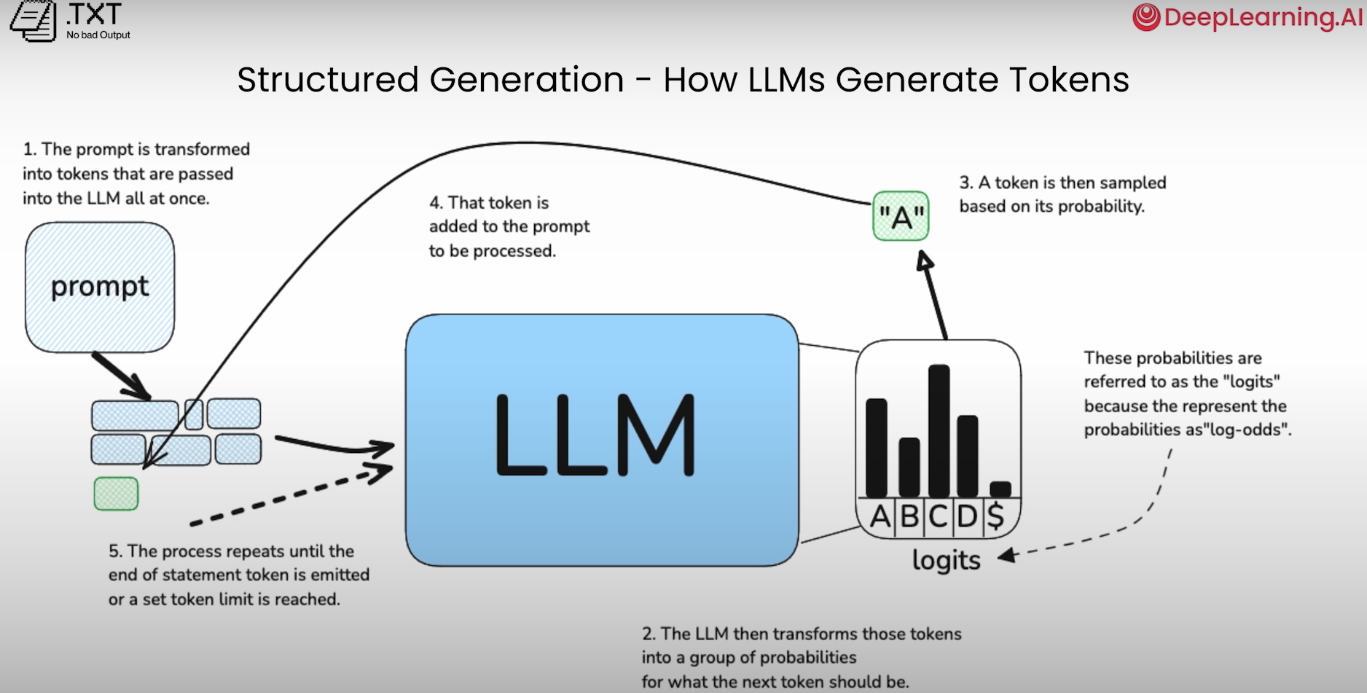
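A minimal sketch of a re-prompting loop, assuming the model is reachable through a plain `call_model(prompt) -> str` callable. That callable, the function names, and the retry wording are all hypothetical and not taken from any provider's library. The idea is to validate the reply in code and, if it fails, ask again with the error attached.

```python
import json
from typing import Callable


def parse_json_with_keys(text: str, required_keys: set) -> dict:
    """Parse text as a JSON object and check that the required keys exist."""
    data = json.loads(text)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def reprompt_until_valid(
    call_model: Callable[[str], str],  # hypothetical: any provider wrapped as str -> str
    prompt: str,
    required_keys: set,
    max_attempts: int = 3,
) -> dict:
    """Call the model, validate the reply, and re-prompt with the error on failure."""
    current_prompt = prompt
    for _ in range(max_attempts):
        reply = call_model(current_prompt)
        try:
            return parse_json_with_keys(reply, required_keys)
        except ValueError as err:
            # Feed the validation error back so the next attempt can correct it.
            current_prompt = (
                f"{prompt}\n\nYour previous reply was invalid ({err}). "
                f"Reply with only a JSON object containing the keys {sorted(required_keys)}."
            )
    raise RuntimeError(f"no valid reply after {max_attempts} attempts")
```

Because the loop depends only on a string-in, string-out callable, the same code can sit in front of different LLM providers. The cost is extra model calls whenever a reply fails validation.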

Structured generation

Works with the model at the point of token generation, so that the model can only sample tokens that fit exactly the structure you define.
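To make the token-level idea concrete, here is a toy sketch of logit masking. It is not how any particular library implements it: the vocabulary, the `toy_logits` stand-in for a model, and all function names are made up for illustration. At each step, tokens that would break the structure get a logit of negative infinity, so they can never be chosen.

```python
import math

# Toy character-level vocabulary; a real model has tens of thousands of tokens.
VOCAB = ["y", "e", "s", "n", "o", "!", "<eos>"]


def allowed_next_tokens(prefix: str, choices: list) -> set:
    """Tokens that keep the output a prefix of (or equal to) one of the choices."""
    allowed = set()
    for choice in choices:
        if choice == prefix:
            allowed.add("<eos>")  # a complete choice may stop here
        elif choice.startswith(prefix):
            allowed.add(choice[len(prefix)])  # next character of this choice
    return allowed


def mask_logits(logits: list, allowed: set) -> list:
    """Set the logit of every disallowed token to -inf."""
    return [l if tok in allowed else -math.inf for tok, l in zip(VOCAB, logits)]


def constrained_greedy_decode(next_logits, choices: list, max_steps: int = 16) -> str:
    """Greedy decoding where only structure-preserving tokens can be picked."""
    prefix = ""
    for _ in range(max_steps):
        masked = mask_logits(next_logits(prefix), allowed_next_tokens(prefix, choices))
        token = VOCAB[max(range(len(VOCAB)), key=lambda i: masked[i])]
        if token == "<eos>":
            return prefix
        prefix += token
    raise RuntimeError("structure not completed within max_steps")


def toy_logits(prefix: str) -> list:
    """Stand-in for a model: always prefers '!', which the structure forbids."""
    return [1.0, 0.5, 0.2, 2.0, 1.5, 5.0, 0.1]


print(constrained_greedy_decode(toy_logits, ["yes", "no"]))  # prints "no"
```

Without the mask, greedy decoding on these logits would emit `!` at every step. With it, the output is guaranteed to be one of the allowed strings, and the model's preferences only decide which one.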

Libraries -

Workflow

Pros and cons

Pros

- Works with any open LLM

- Very fast

- Can reduce inference time

- Provides higher quality results

- Works in resource constrained environments

- Allows for virtually any structure:

  - JSON

  - Regex

  - Even syntactically correct code

Cons

- Requires control over the model, so it works only with open LLMs or with a proprietary model that you host yourself.