What is a Large Language Model (LLM)?

Describe how transformer architecture enables LLMs to handle long-range dependencies in text.

Large Language Models (LLMs) are advanced AI systems designed to understand and generate human-like text. They leverage the transformer architecture, which uses attention mechanisms to process and relate different parts of the input text, enabling them to handle long-range dependencies effectively. This allows LLMs to maintain context over longer passages, making them capable of generating coherent and contextually relevant responses.

📖 What is a Large Language Model (LLM)?

A Large Language Model (LLM) is an artificial intelligence system trained on vast amounts of text data to understand, generate, and interact with human language. It’s based on deep learning architectures — most notably the transformer architecture — and is capable of tasks like:

- Text generation

- Translation

- Summarization

- Question answering

- Code generation, etc.

LLMs are “large” because they contain billions (or even trillions) of parameters — these parameters are tuned during training to capture patterns, meanings, grammar, facts, and reasoning within human language.

Examples: GPT-4, LLaMA 3, PaLM 2, Claude 3.

⚙️ How Transformer Architecture Enables LLMs to Handle Long-Range Dependencies

A key challenge in natural language processing (NLP) is capturing long-range dependencies — relationships between words or phrases that are far apart in the text. Traditional architectures like RNNs and LSTMs struggled with this due to sequential processing and vanishing gradients.

The Transformer architecture, introduced in Vaswani et al., 2017 (“Attention is All You Need”), overcomes this using a mechanism called self-attention.

📌 Key Components of the Transformer:

-

Self-Attention Mechanism

- Every word (token) in a sentence can attend to every other word at once.

- Computes a weighted representation of all other tokens for each token.

- This enables the model to directly capture relationships between distant words without passing information step-by-step.

-

Positional Encoding

- Since transformers process tokens in parallel (unlike sequential RNNs), positional encodings inject information about token positions, so the model knows the order of words.

-

Multi-Head Attention

- Allows the model to capture different types of relationships in parallel — for example, syntactic vs. semantic dependencies — by using multiple attention “heads.”

-

Feedforward Layers

- Apply nonlinear transformations to enrich token representations after attention.

-

Layer Normalization and Residual Connections

- Help stabilize training and preserve information flow across layers.

📊 How This Handles Long-Range Dependencies:

-

Direct Access: In self-attention, each token computes relationships with every other token directly through dot-product attention — no information bottleneck as in RNNs.

-

Parallelism: Unlike RNNs that process tokens sequentially, transformers process entire sequences in parallel, enabling efficient learning of global context.

-

Attention Weights: The attention matrix explicitly shows which tokens focus on which others, making it easy to model dependencies no matter how far apart.

📐 Attention Formula (Scaled Dot-Product Attention):

\[\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right) V\]Where:

- $Q$ = Query matrix

- $K$ = Key matrix

- $V$ = Value matrix

- $d_k$ = dimension of keys

This computes a weighted sum of the values based on the similarity between the queries and keys.

📌 Summary

| Traditional Models | Transformer (LLMs) |

|---|---|

| Sequential processing (RNN/LSTM) | Parallel processing (transformers) |

| Limited long-range dependencies | Direct, global attention to all tokens |

| Vanishing gradients | Stable training via attention and residual connections |

| Difficult to scale to long texts | Scalable via self-attention and positional encoding |

✅ Conclusion:

LLMs powered by transformer architectures revolutionized NLP by enabling models to efficiently capture both local and long-range dependencies in text through the self-attention mechanism. This parallel, non-sequential approach made it possible to scale models to billions of parameters and deliver state-of-the-art performance in diverse language tasks.

Compare the encoder-based models (like BERT) with decoder-only models (like GPT).

What are their respective strengths and weaknesses?

📊 Encoder-based Models (e.g., BERT)

🔧 Architecture:

- Uses the Transformer encoder stack only.

- Takes in the entire input sequence at once and builds contextualized embeddings for each token based on both left and right context (bidirectional).

- Typically trained with masked language modeling (MLM): randomly masks some tokens and predicts them.

✅ Strengths:

| Aspect | Benefit |

|---|---|

| Bidirectional context | Considers both left and right context, better for understanding sentence meaning, syntax, and semantics. |

| Excellent for classification tasks | Highly effective at tasks like sentiment analysis, NER, sentence similarity, etc. |

| Fine-tuning friendly | Pretrained on general corpora, then fine-tuned on specific tasks with relatively little data. |

❌ Weaknesses:

| Aspect | Limitation |

|---|---|

| Not generative | Can’t naturally generate text or complete sequences. |

| Inefficient for long text | High memory and compute cost due to full self-attention over long sequences. |

🎯 Typical Use Cases:

- Text classification

- Named entity recognition

- Question answering (extractive)

- Text entailment

- Embedding generation for similarity/search

📊 Decoder-only Models (e.g., GPT)

🔧 Architecture:

- Uses the Transformer decoder stack only.

- Autoregressive: Predicts the next token based on left context (causal masking ensures it doesn’t look ahead).

- Trained with causal language modeling (CLM): predicts the next token in a sequence.

✅ Strengths:

| Aspect | Benefit |

|---|---|

| Natural text generation | Excellent at generating coherent, creative, and contextually rich text. |

| Flexible zero-shot/few-shot learning | Can perform a variety of tasks by framing them as text generation problems. |

| Handles open-ended tasks | Better suited for summarization, translation, story generation, and dialogue. |

❌ Weaknesses:

| Aspect | Limitation |

|---|---|

| Unidirectional context | Only considers left context, limiting deep understanding of entire input sequences. |

| Weaker at classification-style tasks | Requires task reformulation as generation (e.g., “The sentiment is [positive/negative]”). |

| Scaling inefficiencies | Like BERT, can be memory-heavy on long sequences, though solutions like GPT-NeoX and GPT-4 optimize this somewhat. |

🎯 Typical Use Cases:

- Text generation

- Summarization

- Code generation

- Conversational agents

- Zero/few-shot task execution

📌 Summary Table:

| Feature | Encoder-based (BERT) | Decoder-only (GPT) |

|---|---|---|

| Architecture | Transformer encoder | Transformer decoder |

| Context modeling | Bidirectional | Unidirectional (left-to-right) |

| Pretraining task | Masked Language Modeling (MLM) | Causal Language Modeling (CLM) |

| Strength | Understanding, classification | Natural language generation |

| Weakness | Not generative, poor at long text | Limited context for input comprehension |

| Typical applications | NER, sentiment analysis, QA | Chatbots, story generation, summarization |

📌 Visual Aid: Transformer Architecture Positioning

┌──────────────┐

│ Input Text │

└────┬─────┬───┘

│ │

┌─────▼──┐ ┌▼─────┐

│Encoder │ │Decoder│

└────────┘ └───────┘

- BERT: only uses the left side (Encoder stack)

- GPT: only uses the right side (Decoder stack)

📖 Summary on usage:

- Use BERT-like models for tasks where understanding of the entire input context is crucial (classification, QA, embeddings).

- Use GPT-like models for tasks requiring fluent, contextually-aware text generation or where framing the problem as text prediction makes sense.

BERT Vs GPT Architecture Overview

graph TD

subgraph BERT [Encoder-based Model: BERT]

A1([Token 1]) --> E1[Encoder Layer]

A2([Token 2]) --> E1

A3([Token 3]) --> E1

E1 --> E2[Encoder Layer]

E2 --> E3[Encoder Layer]

E3 --> O1([Output Embeddings for Classification/QA])

end

subgraph GPT [Decoder-only Model: GPT]

B1([Token 1]) -->|Left context only| D1[Decoder Layer]

B2([Token 2]) -->|Token 1 visible| D1

B3([Token 3]) -->|Tokens 1 & 2 visible| D1

D1 --> D2[Decoder Layer]

D2 --> D3[Decoder Layer]

D3 --> O2([Next Token Prediction / Generated Text])

end

style BERT fill:#D9E8FB,stroke:#4A90E2,stroke-width:2px

style GPT fill:#FFE4C4,stroke:#FF7F50,stroke-width:2px

Explain the concepts of:

• Zero-shot learning

• Few-shot learning

• In-context learning

How are they enabled by LLMs like GPT-3/4?

📖 Concepts Explained:

📌 Zero-shot Learning

🔍 The model performs a task without having seen any labeled examples of that task during training or inference.

How it works:

- You provide the model with a clear, natural-language instruction describing what you want it to do.

- The model relies solely on its pretrained knowledge from vast corpora.

Example (GPT-4):

Q: Translate the sentence 'Bonjour' to English.

A: Hello.

👉 No examples of translations are provided — just a prompt and a response.

📌 Few-shot Learning

🔍 The model is shown a few labeled examples of the task in the prompt itself before making a prediction.

How it works:

- A few input-output pairs are provided in the prompt.

- The model uses those examples to infer the pattern and generate the next output.

Example (GPT-4):

Translate French to English:

French: Bonjour | English: Hello

French: Merci | English: Thank you

French: Au revoir | English:

👉 Model predicts “Goodbye” based on those few examples.

📌 In-Context Learning

🔍 A broader term covering both zero-shot and few-shot setups, where the model learns from the prompt context alone during inference, without updating model weights.

How it works:

- LLMs like GPT-3/4 treat the prompt as a temporary context or memory.

- It conditions its response based on patterns within the prompt rather than relying on parameter updates (as in fine-tuning).

Key idea:

The model “learns” in the moment by inferring patterns from the prompt.

📊 How LLMs like GPT-3/4 Enable This:

| Technique | Enabled by | Why it Works in LLMs |

|---|---|---|

| Zero-shot | Large-scale pretrained knowledge | Massive exposure to varied tasks, making it possible to generalize from instructions. |

| Few-shot | Inference-time prompt conditioning | Autoregressive models like GPT can track patterns within a single prompt window. |

| In-context learning | Transformer architecture with large context windows | Self-attention mechanism allows relating new prompt content to pretrained representations dynamically. |

📌 Visualizing It:

Prompt Window in GPT

[Instruction/Examples] → [Task Input] → [Model Response]

- All happens within one forward pass.

- No model weights are updated.

- The “learning” happens by contextualizing information within the prompt.

📖 Why It Matters:

Before GPT-style LLMs, NLP models typically required:

- Task-specific fine-tuning on labeled data.

- Parameter updates for each new task.

Now, with in-context learning:

- Models like GPT-3/4 can handle unseen tasks at inference time.

- Zero/few-shot setups dramatically reduce the need for labeled data.

- Rapid prototyping and dynamic task execution without retraining.

✅ Conclusion:

This capability — in-context learning — is arguably the most disruptive innovation introduced by large autoregressive LLMs. It transformed them from static, narrow models into highly flexible generalists.

graph LR

subgraph "Zero-shot Learning"

A1([Instruction]) --> A2([Task Input])

A2 --> A3([Model Response])

end

subgraph "Few-shot Learning"

B1([Example 1: Input → Output])

B2([Example 2: Input → Output])

B3([Instruction/Task Input])

B1 --> B2 --> B3 --> B4([Model Response])

end

subgraph "In-Context Learning"

C1([Zero-shot or Few-shot Prompt])

C2([Task Input])

C1 --> C2 --> C3([Model Response])

end

style A1 fill:#E0F7FA,stroke:#00796B,stroke-width:2px

style B1 fill:#FFF9C4,stroke:#F9A825,stroke-width:2px

style C1 fill:#D1C4E9,stroke:#673AB7,stroke-width:2px

You are given a chatbot powered by an LLM. What techniques can improve the relevance, safety, and factual accuracy of its responses?

✅ Techniques to Improve Relevance, Safety, and Factual Accuracy

📌 1️⃣ Prompt Engineering

Crafting better prompts or using prompt templates can guide the model toward more relevant and safer answers.

- Few-shot / zero-shot / chain-of-thought prompting

- Including instructions for safety or constraints (e.g. “answer factually and avoid speculation”)

📌 2️⃣ Retrieval-Augmented Generation (RAG)

Combine the LLM’s generative abilities with external knowledge retrieval systems (e.g., databases, APIs, or search engines).

- Fetch up-to-date factual information at runtime

- Prevent reliance on potentially outdated LLM training data

📌 3️⃣ Fine-Tuning / Instruction Tuning

Retraining or further adjusting the model on task-specific data and safety-verified instructions to:

- Improve domain relevance

- Reduce unsafe outputs through exposure to filtered, aligned data

📌 4️⃣ Reinforcement Learning from Human Feedback (RLHF)

Train the model’s output preferences using human-annotated response rankings:

- Encourage helpful, harmless, and honest completions

- Disincentivize unsafe or irrelevant outputs

📌 5️⃣ Output Filtering & Post-Processing

Apply post-generation filters using:

- Safety classifiers (e.g., NSFW, toxicity, misinformation detection models)

- Factual consistency checkers

Before displaying the final output

📌 6️⃣ External Fact-Checking APIs & Grounding

Use fact-checking tools/APIs (like Google Fact Check API, or custom domain databases) to cross-validate the chatbot’s claims

- Flag potentially inaccurate responses

- Ground critical facts in authoritative sources

📌 7️⃣ Guardrails and Safety Layers

Implement policy enforcement modules to prevent:

- Personally identifiable information (PII) leakage

- Dangerous instructions (e.g. self-harm, illegal activities)

Examples: Microsoft Azure AI Content Safety, Anthropic’s Constitutional AI techniques

📌 8️⃣ Explainability & Uncertainty Calibration

Allow the model to:

- Express uncertainty where appropriate (e.g., “I’m not sure” or “This might not be accurate.”)

- Offer source references or reasoning traces

📌 9️⃣ Human-in-the-Loop (HITL) Oversight

For high-risk or sensitive applications:

- Have humans review and approve responses

- Continuously collect user feedback to retrain or adjust guardrails

✅ Summary Table

| Technique | Improves Relevance | Enhances Safety | Boosts Factual Accuracy |

|---|---|---|---|

| Prompt Engineering | ✅ | ✅ | ✅ |

| Retrieval-Augmented Generation (RAG) | ✅ | ✅ | ✅ |

| Fine-Tuning / Instruction Tuning | ✅ | ✅ | ✅ |

| RLHF | ✅ | ✅ | ✅ |

| Output Filtering & Post-Processing | ✅ | ✅ | ✅ |

| External Fact-Checking & Grounding | ✅ | ✅ | ✅ |

| Guardrails / Safety Layers | ✅ | ✅ | 🔶 (Indirectly) |

| Explainability & Uncertainty | ✅ | ✅ | ✅ |

| Human-in-the-Loop Oversight | ✅ | ✅ | ✅ |

Would you like a diagram, a real-world implementation workflow, or example code snippets for any of these? I’d be happy to help.

graph TD

A(User Input) --> B(Prompt Engineering)

B --> C(LLM Inference)

C --> D1(Retrieval-Augmented Generation)

D1 --> C

C --> E(Post-Processing)

E --> F1(Safety Classifier)

E --> F2(Fact Checker)

E --> F3(Explainability Module)

F1 --> G(Safety Guardrails)

F2 --> G

F3 --> G

G --> H(Final Response)

subgraph Fine-Tuning & RLHF [Offline Tuning & Alignment]

I1(Fine-Tuning on Task-Specific Data)

I2(RLHF using Human Feedback)

I1 --> C

I2 --> C

end

subgraph Human-in-the-Loop [Escalation Path]

G --> J(Flagged for Human Review)

J --> H

end

| Step | Component | Purpose |

| :—– | :———————- | :———————————————————- |

| A | User Input | User sends a message |

| B | Prompt Engineering | Enhance prompt clarity, constraints, and structure |

| C | LLM Inference | Generate initial response based on prompt and model weights |

| D1 | RAG | Fetch external, real-time info for grounding |

| E | Post-Processing | Process LLM output before finalizing |

| F1 | Safety Classifier | Detect unsafe content |

| F2 | Fact Checker | Validate factual accuracy against external data |

| F3 | Explainability Module | Add reasoning, citations, or uncertainty expressions |

| G | Safety Guardrails | Enforce safety policies and remove unsafe replies |

| H | Final Response | Deliver response to the user |

| I1 | Fine-Tuning | Offline training on specialized or safety-focused data |

| I2 | RLHF | Model tuning using ranked human preference feedback |

| J | Human Review (Optional) | Escalation if flagged |

How reliable are hallucination detection and prevention techniques in LLMs during open-domain generation?

hallucination detection and prevention techniques are improving, but they remain an open research challenge in open-domain generation.

- 📌 Why hallucinations happen

- 📌 What current techniques exist

- 📌 How reliable they are

- 📌 What the limitations and future directions are

📌 Why Do LLMs Hallucinate?

LLMs are probabilistic next-token predictors, trained to maximize likelihood over vast, sometimes noisy, text corpora.

In open-domain, unconstrained generation:

- There’s no explicit grounding to factual or verified information

- The model may “fill in” plausible-sounding but incorrect content, especially for rare, unseen, or ambiguous queries

- The training data might contain conflicting, outdated, or fabricated information

📌 Current Hallucination Detection & Prevention Techniques

| Technique | How It Works | Reliability (1–5) | Notes |

|---|---|---|---|

| Retrieval-Augmented Generation (RAG) | Augment generation with live, authoritative retrieved knowledge | ⭐⭐⭐⭐ | High factuality, but dependent on retriever quality |

| Fact Consistency Classifiers | Use a trained classifier to flag inconsistencies between claim and retrieved/documented facts | ⭐⭐⭐ | Promising but can misclassify subtle errors |

| Self-Consistency Sampling | Compare multiple generations to detect outliers or unstable outputs | ⭐⭐⭐ | Reliable for confidence estimation, less so for deep factuality |

| Chain-of-Thought & Self-Reflection Prompts | Ask the model to explain or validate its answer step-by-step | ⭐⭐⭐ | Reduces hallucination but not foolproof |

| Human-in-the-loop Oversight | Human reviews outputs in high-stakes cases | ⭐⭐⭐⭐⭐ | Gold standard but not scalable for all use cases |

| Constitutional AI / Rule-based Filtering | Impose ethical and factual constraints via post-generation rules | ⭐⭐⭐ | Good for safety/ethics, limited for fine-grained factuality |

| Uncertainty Calibration / Confidence Scores | Encourage the model to flag uncertain answers | ⭐⭐ | Current models are poorly calibrated for open-domain facts |

| External Fact-Checking APIs | Validate generated claims against third-party APIs/databases | ⭐⭐⭐⭐ | Highly reliable for verifiable claims |

📌 How Reliable Are They?

- For narrow or closed-domain tasks (e.g. legal documents, medical advice) — hallucination rates can be reduced dramatically using RAG, fine-tuning, and fact-checking

- For open-domain creative or knowledge generation (e.g. summarizing history, answering rare trivia) — hallucination rates remain significant, often between 10–30% even with detection layers

👉 Example:

GPT-4’s own hallucination rate in open-domain factual QA was around 20% in recent benchmarks like TruthfulQA and OpenFact — though retrieval-augmented models like ChatGPT browsing or Bing Chat improved accuracy by 20–40%

📌 Limitations

- High compute cost for multi-sample self-consistency

- Fact-checking coverage gaps for non-structured or emerging facts

- Classifier brittleness when phrasing is nuanced

- Over-reliance on retrieval quality in RAG setups

- Poor uncertainty calibration in current large models

📌 Future Directions

- Multi-agent self-consistency and debate models (e.g. Anthropic’s Claude debate experiments)

- Better fact-grounded pretraining datasets

- Dynamic fact-checking modules embedded within LLM pipelines

- Improved uncertainty estimation techniques

- Semantic verifiers and factuality-focused reward models in RLHF

✅ Summary:

Current hallucination detection/prevention techniques significantly reduce risk but are not yet universally reliable for open-domain generation.

For critical applications (medical, legal, financial, etc.) — RAG, fact-checkers, and human oversight remain essential.

Can synthetic data augmentation (e.g., bootstrapping with smaller models) enhance LLM training efficiency or fairness?

Excellent question — and yes, synthetic data augmentation, including techniques like bootstrapping with smaller models, can meaningfully enhance LLM training efficiency, generalization, and fairness when carefully designed and applied. Let’s unpack this in detail:

📌 How Synthetic Data Augmentation Helps in LLM Training

1️⃣ Efficiency

-

Bootstrapping smaller models (or using smaller LLMs or task-specific models) to generate synthetic training data allows you to:

- Pre-train or fine-tune larger models on richer, more diverse corpora without the expensive cost of collecting and cleaning real-world data.

- Amplify low-resource domains or languages, or rare task-specific examples.

- Reduce the need for costly human annotation by generating pseudo-labeled datasets.

Example:

Use a smaller GPT-2 or instruction-tuned T5 model to generate paraphrases or augment underrepresented classes in a classification task before training a larger model like GPT-4.

2️⃣ Fairness

- Biases in training data are a core problem for LLMs. If certain groups, dialects, or perspectives are underrepresented in the training corpus, the model inherits those disparities.

-

Synthetic data can:

- Balance representation for marginalized dialects, demographic groups, or viewpoints by explicitly generating diverse synthetic content.

- Create counterfactual data: e.g., generate the same sentence with swapped gender, ethnicity, or socio-cultural attributes to measure and mitigate bias.

Example:

Generate balanced QA pairs or dialogue turns where both male and female pronouns are used equally in professional contexts to mitigate occupational gender bias.

📌 Challenges and Trade-offs

| Benefit | Challenge |

|---|---|

| Data augmentation improves data diversity and mitigates sparsity. | Synthetic data might inherit or amplify biases from the generating model. |

| Bootstrapping allows fast generation of training samples for niche or emerging tasks. | Synthetic data quality control is non-trivial — poor-quality samples can mislead model learning. |

| Efficient for low-resource domains without large annotated corpora. | Potential for overfitting on model-generated artifacts instead of real-world language distributions. |

Fairness improvement via synthetic augmentation requires careful auditing of the synthetic dataset for unintended biases.

📌 Recent Research Trends Supporting This

-

Self-Training with LLM-generated Labels

- Recent papers like Self-Instruct (Wang et al. 2022) use LLMs to generate instruction-following data for themselves, achieving efficiency improvements.

-

Counterfactual Data Augmentation

- Studies in NLP fairness (e.g., Zhao et al. 2018’s work on gender bias) show how counterfactual data improves equitable treatment by models.

-

Knowledge Distillation & Model Bootstrapping

- Smaller models trained on both human and LLM-generated synthetic data have been shown to approximate LLM behavior efficiently, useful for edge or specialized applications.

📌 Good Practices

✔️ Use human-in-the-loop validation for synthetic data to catch biases or incoherencies.

✔️ Mix real and synthetic data carefully to avoid model overfitting on synthetic artifacts.

✔️ Regularly audit both model outputs and synthetic datasets for fairness metrics.

✅ Conclusion

Yes — when thoughtfully designed, synthetic data augmentation via bootstrapping with smaller models can enhance both the training efficiency and fairness of LLMs. It offers a practical way to expand and diversify data corpora, especially in low-resource or bias-sensitive applications.

Perfect — let’s outline both a conceptual pipeline and a couple of recent case studies where synthetic data augmentation via smaller models improved LLM training efficiency and fairness.

📊 Conceptual Pipeline: Synthetic Data Bootstrapping for LLM Training

flowchart TD

A[Collect Base Real-World Data] --> B[Train-Use Smaller Generative Models e.g., T5, GPT-2, Alpaca]

B --> C[Generate Synthetic Data paraphrases, counterfactuals, rare cases]

C --> D[Quality Control: Automatic & Human-in-the-loop Filtering]

D --> E[Augment Real Dataset with Synthetic Data]

E --> F[Fine-Tune - Pre-Train Larger LLM e.g. LLaMA 3, GPT-4]

F --> G[Evaluate for Efficiency Gains and Fairness Metrics]

G --> H[Deploy or Iteratively Improve]

📚 Notable Case Studies

📌 1️⃣ Self-Instruct: Bootstrapping Instruction-Tuning Data

- Authors: Wang et al., 2022

-

What they did:

- Used GPT-3 to generate thousands of synthetic instruction-following examples.

- Curated the outputs, filtered for quality and diversity.

- Fine-tuned smaller LMs on this synthetic dataset.

-

Results:

- Models achieved competitive performance on downstream instruction-tuning tasks at a fraction of the data collection cost.

Key takeaway: Self-generated synthetic data can bootstrap instruction-following abilities efficiently.

📌 2️⃣ Counterfactual Data Augmentation for Bias Mitigation

- Authors: Zhao et al., 2018 (Gender Bias in Coreference Resolution)

-

What they did:

- Created counterfactual examples by swapping gender terms (e.g., “doctor” with “he” vs. “she”).

- Trained and evaluated models on both original and counterfactual data.

-

Results:

- Significant reduction in gender bias in NLP models.

Key takeaway: Counterfactual augmentation improves fairness by balancing demographic representations.

📌 3️⃣ Alpaca: Instruction-Tuning on LLaMA with Synthetic Data

- Authors: Stanford CRFM, 2023

-

What they did:

- Used OpenAI’s GPT-3 to generate synthetic instruction-following data.

- Fine-tuned a smaller LLaMA-7B model (called Alpaca) on this synthetic dataset.

-

Results:

- Alpaca achieved comparable instruction-following performance to larger proprietary models.

- Open-sourced for the community to replicate.

Key takeaway: Smaller, bootstrapped synthetic datasets can produce lightweight, performant instruction-tuned LLMs.

📌 Fairness and Efficiency Metrics to Track

| Efficiency Metric | Fairness Metric |

|---|---|

| Reduction in annotation time | Demographic parity in generated outputs |

| Reduction in compute cost | Equalized odds for different groups |

| Improvement in few-shot performance | Counterfactual consistency |

| Data size vs. performance trade-off | Bias score delta before/after augmentation |

✅ Final Thought

Synthetic data bootstrapping — when paired with rigorous filtering and fairness auditing — offers a scalable, efficient, and ethically sound way to enhance LLM training pipelines.

The main idea of attention

The evolution of NLP has been as follows -

| Date | Model | Description |

|---|---|---|

| 1966 | Eliza | First chatbot |

| 1980s | RNN | last hidden state would have the compressed past context |

| 1997 | LSTM | The long term cell state will give equal importance to all the past hidden states |

| 2014 | Bahdanau Attention | Attention + RNN (RNN was used for both encoder and decoder) |

| 2017 | Attention + Transformer | RNN was removed and Transformer was introduced. But it had again both encoder and decoder. BERT is an example for encoder + decoder architecture. BERT paper |

| 2018 | Attention + General Purpose Transformer | No Encoder. Only decoder. Encoder as a separate block was removed from original transformer |

RNN - Recurrent Neural Networks

RNNs: Recurrent Neural Networks (RNNs) were the first to be used for sequence-to-sequence tasks, but they struggled with long-range dependencies.

Click to expand

LSTM - Long Short-Term Memory

LSTM: Long Short-Term Memory (LSTM) networks improved upon RNNs by introducing memory cells and gates, allowing them to capture long-range dependencies more effectively. But still the problem was that all the previous hidden states were used to generate the next hidden state, which made it difficult to focus on specific parts of the input sequence.

Click to expand

Attention Mechanism

Bhadanau Attention

Neural machine translation by jointly learning to align and translate (Bahdanau et al., 2014) is a seminal paper that introduced the concept of attention in neural networks. The paper proposed a method for aligning and translating sequences of variable lengths, which was particularly useful for machine translation tasks.

The key idea behind Bahdanau attention is to compute a context vector that summarizes the relevant information from the input sequence for each output time step. This is done by using a neural network to learn an alignment score for each input time step, which indicates how much attention should be paid to that time step when generating the output.

Attention: The attention mechanism was introduced to allow models to focus on specific parts of the input sequence when generating each output token. This was particularly useful for tasks like machine translation, where the alignment between input and output sequences is crucial.

Attention is about selectively accessing parts of input sequence with different weights, rather than using the entire input sequence equally. This allows the model to focus on relevant parts of the input when generating each output token.

For example to translate the sentence “I will eat” to French “Je vais manger”, the model can focus on the word “I” when generating the word “Je”, rather than considering all the words in the input sequence equally. That is the challenge, how to select which past tokens are how much important quantitatively for predicting the next token. This is done by what is known as attention scores for every hidden state.

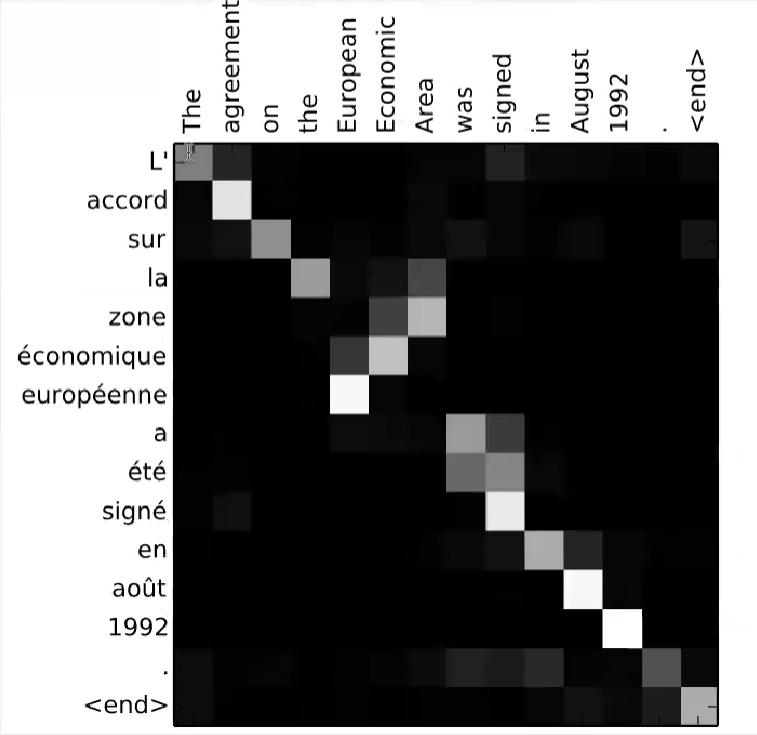

- The relevant past tokens might or might not be 1:1 corresponding with the next token. For example, in the figure below the correspondence is as mentioned below. In the sentence formation the French word for zone is written before that of the economic.

- French - zone economique europeenne

- English - economic zone area

Note that in the figure above, if there was a 1:1 correspondence only the diagonal elements would be highlighted. But there are many cells which are off diagonal that are highlighted.

Attention mechanism does two things as we see here - - translates the actual words.

- identifies the right order of the words. And this order can change from that of the input sequence, based on the construct of the language.

-

The Bhadanav Attention mechanism coupled RNN + Attention mechanism. It was not earlier imagined to be used for text summarization as well. It was primarily for text translation.

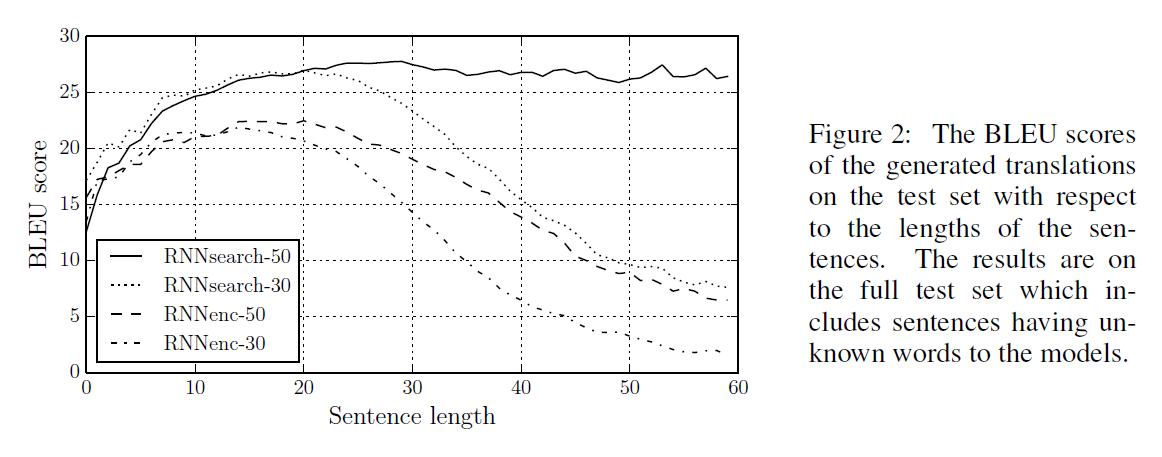

In the figure below, the BLEU score is used to measure the quality of the translation.

RNNsearch-50 and RNNsearch-30 are models that include attention mechanism. Others do not have the attention mechanism.

The LSTMs without Attention mechanism would start failing as the length of the input sentence keeps growing, as shown in the figure above. - Bhadanav attention mechanism was typically used for text translation. So, the attention was from the source language to the target language. The attention scores were calculated between the source and target language.

Self attention with trainable weights

-

In the above case where the Bhadanav attention was used, the attention scores were created to be able to translate the words of one language to the other.

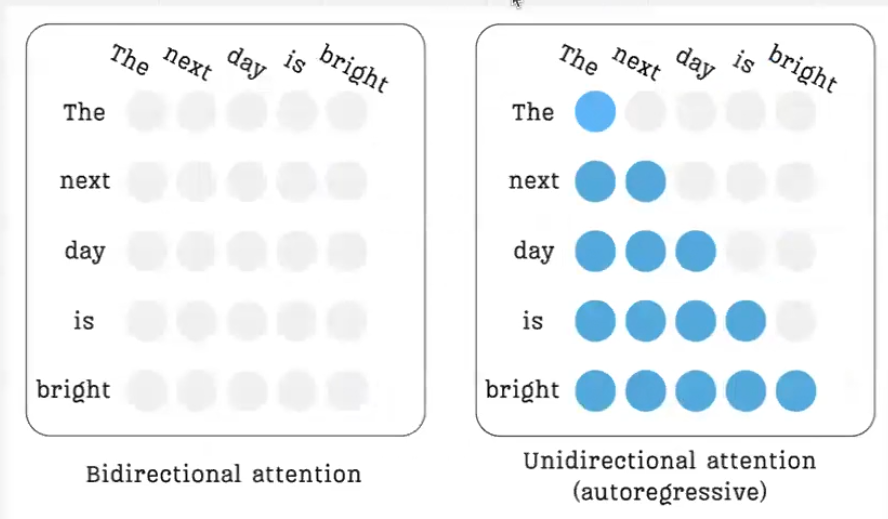

Self attention is the case where same concept is being used for text generation. So, source and targets are the same input text stream. This is known as Auto-regression. The model is trained to predict the next token in the sequence based on the previous tokens. The attention scores are calculated between the tokens in the same sequence.

When the attention mechanism directed such that the source and target is the same sequence of words, it is known as Self Attention. -

In self attention we look into only one sequence of words. This is the main mechanism that is used to predict the next token. We take one token, and check how much attention score will that token have for all the other tokens in the sequence.

For example, take the token - “next”. We need to find the attention scores of “next” with all the other tokens in the sequence, both front and back.

The token that we are focusing currently is known as query. The other tokens are known as keys. The attention scores are calculated between the query and the keys.

How to compute the attention scores?

Dot product

By taking the dot product we can get an intuitive attention score which will demonstrate the closeness of one query with the other keys. But the problem is that if their are two keys, whose magnitude is same but their directions are different, the value of dot products will be same. Like for example if we have the keys as follows, the magnitude of the key “dog” is the same as that of the key “ball”. In that case if we have a value say, “it”, the dot products will be same for both the cases.

Train a neural network

Start with a random initialized matrix for query and keys. And then give the right attention scores as inputs and train a neural network to learn the weights of the matrix.

Assume the embedding size is 3. And assume two weight matrices named Query and Key. The weight matrices are initialized randomly.

Query

The current word or token has a “question” about its context. “What should I pay attention to?”

Key

Every token provides a “key”, acting as a label or signal to answer queries from other tokens. It indicates : “Here is what information I can provide”

Value

Once a match (query - key) is found, this is the actual information provided. It says, “Here is the meaning or content you will get if you attend to me”

Self Attention : Workflow for creation of context vector

The big picture : End to end Workflow of Self Attention

Details of the Multi-head attention

Details of single head self attention

Details of the workflow of self attention

Implementation of Self attention

Step 1 : Start with the input embedding matrix

Embedding dimension or input dimension : 8

Context length : 5

import torch

inputs = torch.tensor(

[[0.43, 0.15, 0.89, 0.17, 0.23, 0.19, 0.38, 0.44], # The (x^1)

[0.55, 0.87, 0.66, 0.51, 0.49, 0.3, 0.2, 0.1], # next (x^2)

[0.57, 0.85, 0.64, 0.8, 0.1, 0.4, 0.21, 0.39], # day (x^3)

[0.22, 0.58, 0.33, 0.4, 0.4, 0.4, 0.1, 0.3], # is (x^4)

[0.77, 0.25, 0.10, 0.1, 0.9, 0.3, 0.3, 0.2]] # bright (x^5)

)

Illustration of the code

Step 2 : Set the input and output dimensions

d_in = inputs.shape[1]

d_out = 4

print(x_2)

print(d_in)

print(d_out)

Output >>

tensor([0.5500, 0.8700, 0.6600, 0.5100, 0.4900, 0.3000, 0.2000, 0.1000])

8

4

Illustration of the code

Step 3 : Initialize the weight matrices for query, key and value.

Wq, Wk, Wv are just trainable weight matrices. They have no relationships with the input embeddings of the text sequence.

torch.manual_seed(123)

W_query = torch.nn.Parameter(torch.rand(d_in, d_out), requires_grad=False)

W_key = torch.nn.Parameter(torch.rand(d_in, d_out), requires_grad=False)

W_value = torch.nn.Parameter(torch.rand(d_in, d_out), requires_grad=False)

print(W_query)

Output >>

Parameter containing:

### Wq is as follows.

### It is just a trainable weight matrix. It has no relationship with the input embeddings of the text sequence.

tensor([[0.2961, 0.5166, 0.2517, 0.6886],

[0.0740, 0.8665, 0.1366, 0.1025],

[0.1841, 0.7264, 0.3153, 0.6871],

[0.0756, 0.1966, 0.3164, 0.4017],

[0.1186, 0.8274, 0.3821, 0.6605],

[0.8536, 0.5932, 0.6367, 0.9826],

[0.2745, 0.6584, 0.2775, 0.8573],

[0.8993, 0.0390, 0.9268, 0.7388]])

print(W_key)

Output >>

Parameter containing:

tensor([[0.7179, 0.7058, 0.9156, 0.4340],

[0.0772, 0.3565, 0.1479, 0.5331],

[0.4066, 0.2318, 0.4545, 0.9737],

[0.4606, 0.5159, 0.4220, 0.5786],

[0.9455, 0.8057, 0.6775, 0.6087],

[0.6179, 0.6932, 0.4354, 0.0353],

[0.1908, 0.9268, 0.5299, 0.0950],

[0.5789, 0.9131, 0.0275, 0.1634]])

print(W_value)

Output >>

Parameter containing:

tensor([[0.3009, 0.5201, 0.3834, 0.4451],

[0.0126, 0.7341, 0.9389, 0.8056],

[0.1459, 0.0969, 0.7076, 0.5112],

[0.7050, 0.0114, 0.4702, 0.8526],

[0.7320, 0.5183, 0.5983, 0.4527],

[0.2251, 0.3111, 0.1955, 0.9153],

[0.7751, 0.6749, 0.1166, 0.8858],

[0.6568, 0.8459, 0.3033, 0.6060]])

Illustration of the code

Step 4: Computation of Query, Key and Value matrices

keys = inputs @ W_key

values = inputs @ W_value

queries = inputs @ W_query

print("keys.shape:", keys.shape)

Output >>

keys.shape: torch.Size([5, 4])

print("values.shape:", values.shape)

Output >>

values.shape: torch.Size([5, 4])

print("queries.shape:", queries.shape)

Output >>

queries.shape: torch.Size([5, 4])

Note the dimensions of the keys, values and queries. We have moved from din to dout.

Illustration of the code

finding the Q, K, V matrices for “next” token

Note : - The entire Wq is multiplied by the input vector to determine the Q for the input vector for “next”.

query_2 = x_2 @ W_query

key_2 = x_2 @ W_key

value_2 = x_2 @ W_value

print(query_2)

Each element of Q, K, V is a linear combination of the input embedding, done by scaling up using the weight matrices

Step 5: Compute the attention scores

attn_scores = queries @ keys.T

print(attn_scores)

Output >>

tensor([[ 8.7252, 10.8803, 11.0007, 7.7678, 9.7598],

[ 9.7351, 12.0370, 12.2923, 8.7149, 10.9628],

[10.4691, 12.9987, 13.1878, 9.3438, 11.8256],

[ 7.7531, 9.6199, 9.7608, 6.9217, 8.7864],

[ 8.8185, 10.9612, 11.1314, 7.8699, 9.8633]])

computing the attention scores for next token with next token w22

keys_2 = keys[1]

attn_score_22 = query_2.dot(keys_2)

print(attn_score_22)

Output >>

tensor(12.0370)

Generalizing this to get the attention scores for all keys for token “next”

attn_scores_2 = query_2 @ keys.T # All attention scores for given query

print(attn_scores_2)

Output >>

tensor([ 9.7351, 12.0370, 12.2923, 8.7149, 10.9628])

Step 6: Compute the attention weights

Why divide by sqrt(d_k)?

Reason 1: For stability in learning

The softmax function is sensitive to the magnitudes of its inputs. When the inputs are large, the differences between the exponential values of each input become much more pronounced. This causes the softmax output to become “peaky,” where the highest value receives almost all the probability mass, and the rest receive very little.

In attention mechanisms, particularly in transformers, if the dot products between query and key vectors become too large (like multiplying by 8 in this example), the attention scores can become very large. This results in a very sharp softmax distribution, making the model overly confident in one particular “key.” Such sharp distributions can make learning unstable

Illustration on how softmax() increases the magnitude of the result for higher values

import torch

# Define the tensor

tensor = torch.tensor([0.1, -0.2, 0.3, -0.2, 0.5])

# Apply softmax without scaling

softmax_result = torch.softmax(tensor, dim=-1)

print("Softmax without scaling:", softmax_result)

# Multiply the tensor by 8 and then apply softmax

scaled_tensor = tensor * 8

softmax_scaled_result = torch.softmax(scaled_tensor, dim=-1)

print("Softmax after scaling (tensor * 8):", softmax_scaled_result)

Output >>

Softmax without scaling: tensor([0.1925, 0.1426, 0.2351, 0.1426, 0.2872])

Softmax after scaling (tensor * 8): tensor([0.0326, 0.0030, 0.1615, 0.0030, 0.8000])

0.8000 is higher than other values in the order of 100x.

Reason 2 : To make the variance of the dot product stable

The dot product of Q and K increases the variance because multiplying two random numbers increases the variance.

The increase in variance grows with the dimension.

Dividing by sqrt (dimension) keeps the variance close to 1

Illustration on how the variance increases with the dimension of the vector

Imagine you’re rolling dice. Consider two cases:

Case 1: Rolling one standard die (1–6):

- The average (mean) : 3.5

- The variance : 2.9 (calculated as the average of the squared differences from the mean). The variance is relatively small.

Case 2: Rolling and summing 100 dice:

- The average (mean) : $100 \times 3.5 = 350$

- The variance : $100 \times 2.9 = 290$. The variance significantly grows.

Now, outcomes fluctuate widely (e.g., you might get sums like 320, 350, or 380). The distribution spreads out drastically. Outcomes become unpredictable.

Dot Product without normalization:

Think of dimensions as “dice.” Increasing the number of dimensions is like rolling more dice and summing results.

Each dimension (dice) contributes some variance. As dimensions grow, variance accumulates.

Result: Dot products (before softmax) become either extremely large or small, making attention weights unstable and erratic.

Dot Product with normalization (dividing by $\sqrt{d}$):

This effectively scales down the variance, ensuring the summed results remain stable.

It’s like taking the average roll per dice rather than summing them up, stabilizing your expected outcomes.

Result: Attention weights become more stable, predictable, and informative, enabling the model to learn effectively.

# dim = -1 implies the operation is done column-wise.

attn_weights_final = torch.softmax(attn_scores / d_k**0.5, dim=-1)

print(attn_weights_final)

row_sums = attn_weights_final.sum(dim=1)

print("\nSum of Each Row:")

print(row_sums)

Output >>

tensor([[0.1069, 0.3140, 0.3335, 0.0662, 0.1793],

[0.0980, 0.3099, 0.3521, 0.0589, 0.1811],

[0.0911, 0.3227, 0.3547, 0.0519, 0.1795],

[0.1162, 0.2954, 0.3170, 0.0767, 0.1947],

[0.1063, 0.3103, 0.3379, 0.0662, 0.1793]])

Sum of Each Row:

tensor([1.0000, 1.0000, 1.0000, 1.0000, 1.0000])

Step 7: Compute the context vector

context_vec = attn_weights_final @ values

print(context_vec)

Output >>

tensor([[1.3246, 1.5236, 1.8652, 2.3285],

[1.3301, 1.5304, 1.8753, 2.3433],

[1.3325, 1.5353, 1.8866, 2.3537],

[1.3211, 1.5153, 1.8390, 2.3002],

[1.3253, 1.5242, 1.8657, 2.3304]])

Modular implementation of self attention

Version 1

import torch.nn as nn

class SelfAttention_v1(nn.Module):

#### Step 1 : Initialize the weight matrices for query, key and value.

def __init__(self, d_in, d_out):

super().__init__()

self.W_query = nn.Parameter(torch.rand(d_in, d_out))

self.W_key = nn.Parameter(torch.rand(d_in, d_out))

self.W_value = nn.Parameter(torch.rand(d_in, d_out))

#### Step 2 : Compute the Query, Key and Value matrices

self.d_in = d_in

self.d_out = d_out

def forward(self, x):

keys = x @ self.W_key

queries = x @ self.W_query

values = x @ self.W_value

#### Step 3 : Compute the attention scores

attn_scores = queries @ keys.T # omega

#### Step 4 : Compute the attention weights (normalization and then softmax)

attn_weights = torch.softmax(

attn_scores / keys.shape[-1]**0.5, dim=-1

)

context_vec = attn_weights @ values

return context_vec

Example usage of computing the context matrix for any input embedding matrix

# Initializing the self attention with the input dimension (embedding) and the output dimension (context)

torch.manual_seed(123)

sa_v1 = SelfAttention_v1(d_in, d_out)

# Directly computing the Context matrix in one line, from any input embedding matrix

print(sa_v1(inputs))

Output >>

tensor([[1.3246, 1.5236, 1.8652, 2.3285],

[1.3301, 1.5304, 1.8753, 2.3433],

[1.3325, 1.5353, 1.8866, 2.3537],

[1.3211, 1.5153, 1.8390, 2.3002],

[1.3253, 1.5242, 1.8657, 2.3304]], grad_fn=<MmBackward0>)

Version 2 : Using nn.Linear to initialize the weight matrices for query, key and value. This makes the training stabler.

- The random seeds are chosen by the linear neural network

- Also Q, K, V are just nn.Linear(din, dout, bias=false). This computes the output layer of the nn in a way it does the sum of products anyways. So, matrix multiplication is not done explicitly but $nn.Linear()$ is used to determine Q, K, V matrices.

- Here linear neural network means that output is a linear combination of the input. The output is a weighted sum of the inputs. The weights are the parameters of the linear layer, and they are learned during training. The bias term is also a parameter that is learned during training. The output is computed as follows:

$output = (W \cdot input + b)$ where W is the weight matrix and b is the bias vector. - Here the non-linear layer of the neural network is not used. That is implemente by passing the output of the linear layer into the activation function.

class SelfAttention_v2(nn.Module):

def __init__(self, d_in, d_out, qkv_bias=False):

super().__init__()

self.W_query = nn.Linear(d_in, d_out, bias=qkv_bias)

self.W_key = nn.Linear(d_in, d_out, bias=qkv_bias)

self.W_value = nn.Linear(d_in, d_out, bias=qkv_bias)

def forward(self, x):

keys = self.W_key(x)

queries = self.W_query(x)

values = self.W_value(x)

attn_scores = queries @ keys.T

attn_weights = torch.softmax(attn_scores / keys.shape[-1]**0.5, dim=-1)

context_vec = attn_weights @ values

return context_vec

torch.manual_seed(789)

inputs = torch.tensor(

[[0.43, 0.15, 0.89, 0.17, 0.23, 0.19, 0.38, 0.44], # The (x^1)

[0.55, 0.87, 0.66, 0.51, 0.49, 0.3, 0.2, 0.1], # next (x^2)

[0.57, 0.85, 0.64, 0.8, 0.1, 0.4, 0.21, 0.39], # day (x^3)

[0.22, 0.58, 0.33, 0.4, 0.4, 0.4, 0.1, 0.3], # is (x^4)

[0.77, 0.25, 0.10, 0.1, 0.9, 0.3, 0.3, 0.2]] # bright (x^5)

)

d_in = 8

d_out = 4

sa_v2 = SelfAttention_v2(d_in, d_out)

print(sa_v2(inputs))

Output >>

tensor([[ 0.0174, 0.0553, -0.1093, 0.1026],

[ 0.0175, 0.0556, -0.1089, 0.1024],

[ 0.0175, 0.0559, -0.1087, 0.1022],

[ 0.0179, 0.0544, -0.1091, 0.1028],

[ 0.0172, 0.0543, -0.1105, 0.1032]], grad_fn=<MmBackward0>)

Step 8 : Causal Attention

The attention weights computed above in the self attention method are for all tokens.

But the attentions weights for future queries are not required, when computing the attention scores for the current query as per the auto-regressive self attention.

Minus Infinity Mask

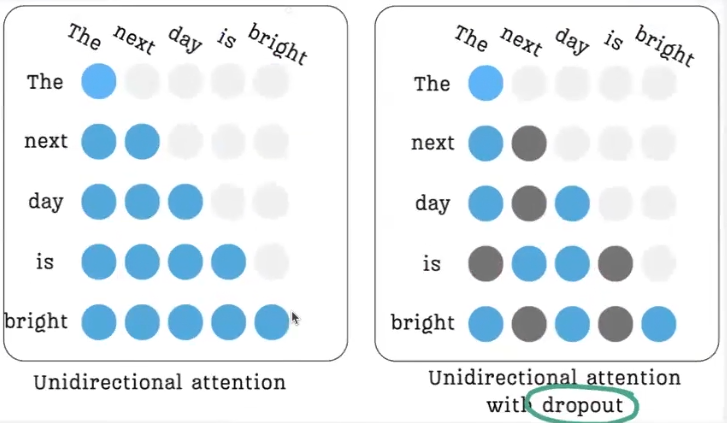

Uni-direction attention with Dropout

There are some neurons which do no to any work in a neural network. Dropout is added to avoid this lazy neuron problem. This basically randomly offs some neurons in the network. This is done to avoid overfitting. The dropout rate is typically set between 0.1 and 0.5, meaning that 10% to 50% of the neurons are randomly turned off during training.

This forces the network to learn more robust features and prevents it from relying too heavily on any single neuron.

In a particular iteration the grayed cells are the neurons that are turned off. In some other iteration other neurons will be randomly turned off.

Multi-head attention

In the single head causal self attention explained above we have only one attention head. This means that the model is only able to focus on one perspective of the input sequence at a time. This can be limiting, especially for complex tasks where multiple parts of the input sequence may be relevant at the same time.

For example, consider this sentence : The artist painted the portrait of a woman with a brush

Layer normalization

Layer normalization is done on input embedding. Information is not lost because it is relative scaling.

Layer normalization is needed to handle the following -

Vanishing or exploding gradient problem

Gradients need to be constrained. If the gradients are too large, the model will not be able to learn. If the gradients are too small, the model will not be able to learn. This is known as the vanishing gradient problem. The gradients need to be constrained to a certain range. This is done by using layer normalization.

The output layer plays an important role in determining the gradient of a layer.

Feed-forward Neural Network

Disadvantages of Relu

- not differentiable between negative and zero inputs.

- when input is negative, relu is zero. So the gradient is zero. This is known as the dying relu problem. Dead Neuron. Neuron is dead. It is not able to learn anything.

Leaky relu

GELU activation function

Covariate shift

Value clipping

All the illustrations and mindmaps referenced in this article :

References

- What is the big deal about attention - vizuara substack

- Attention is all you need - arxiv

- Attention mechanism 1 hour video